Game AI

FIT ČVUT, winter semester 2019

Ing. Adam Vesecký

vesecky.adam@gmail.com

Czech Technical University in Prague

Faculty of Information Technology

Department of Software Engineering

© Adam Vesecký, MI-APH, 2019

Game AI overview

Artificial intelligence in games

- a broad set of principles that generate behaviors, providing an illusion of intelligence

- the second greatest challenge after graphics

- not to be mistaken for academic AI

- If you have a stunning FPS game, who cares whether or not the NPCs really work together as a team as long as the player believes they actually do?

AI techniques

AI in game history

Doom 1 (1993)

- monster infighting (two AI characters encounter each other)

Age of Empires (1997)

- AI is smart enough to find and revisit other kingdoms, but poor at self-defense

Half-Life (1998)

- monsters can hear and track players, flee when getting defeated etc.

F.E.A.R. (2005)

- tactical coordinating with squad members, suppression fire, blind fire

ARMA 3 (2013)

- efficient issuing of orders, situation-based decisions, retreats, ambushes

DOTA 2 (2013)

- OpenAI bot (since 2017) has beaten some of the greatest players

Navigation

Navigation

- navigation is essential for AI to accomplish tasks

- local navigation in an environment (collision avoidance, ORCA)

- global navigation (pathfinding)

- navigation in a graph of case-based tasks

- choreographed formations

Navigation graph

- abstraction of all locations in a game environment the agents may visit

- enables game agents to calculate paths that avoid water, prefer traveling on roads to forest,...

- may carry additional attributes (functions, type of a crossing etc.)

- waypoint-based, mesh-based, grid-based

- Node - position of a key area within the environment

- Edge - connection between those points

Waypoint-based

- level designer places waypoints that are later linked up

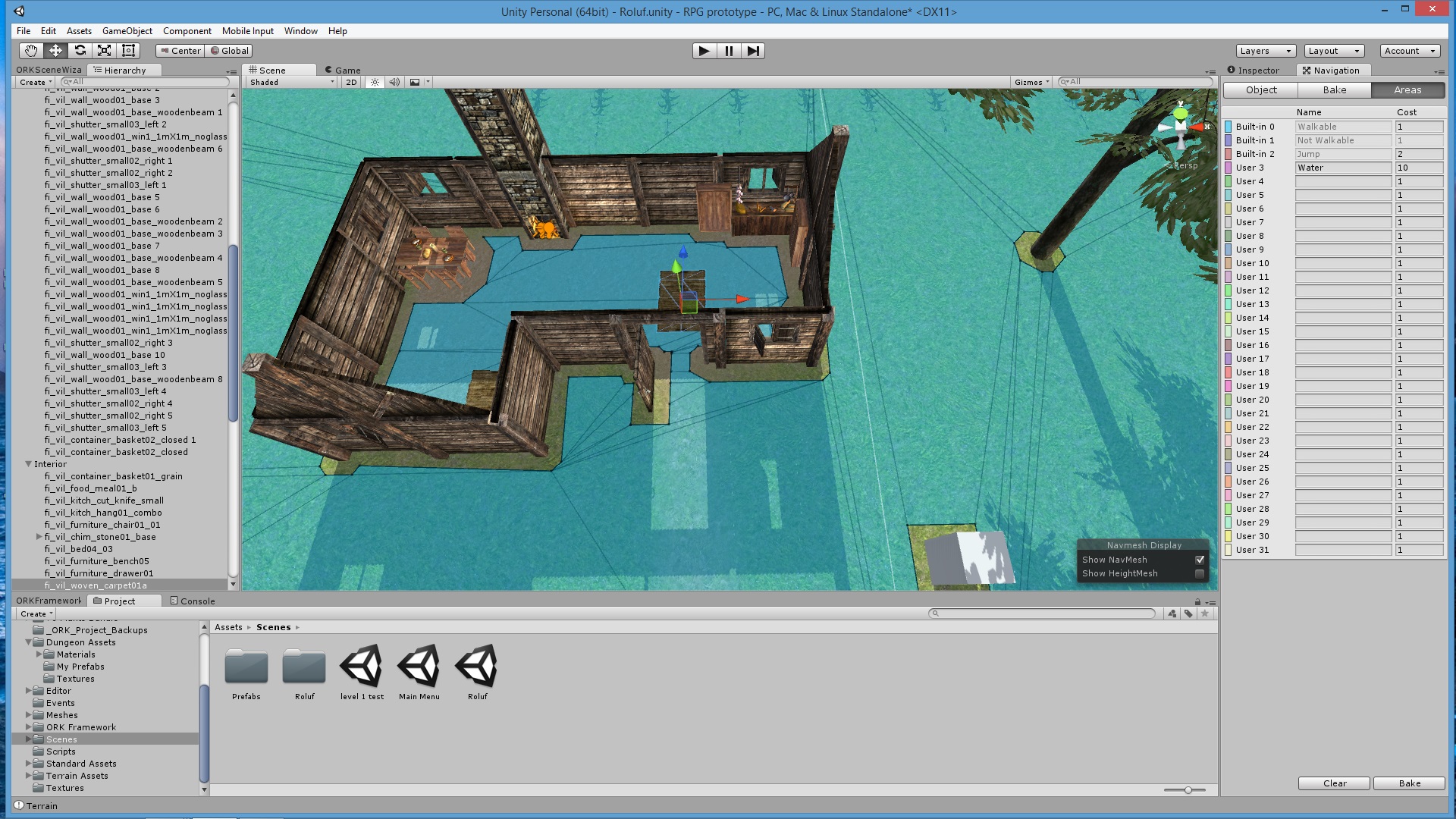

Mesh-based

- created from a polygonal representation of the environment's floor

- describes walkable areas

Example: Unity mesh editor

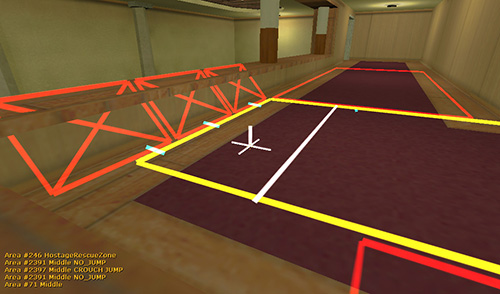

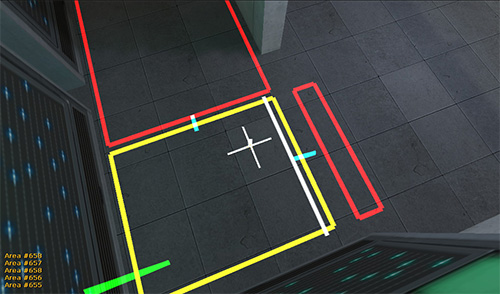

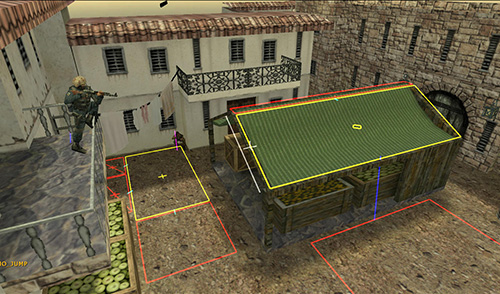

Example: Counter-Strike

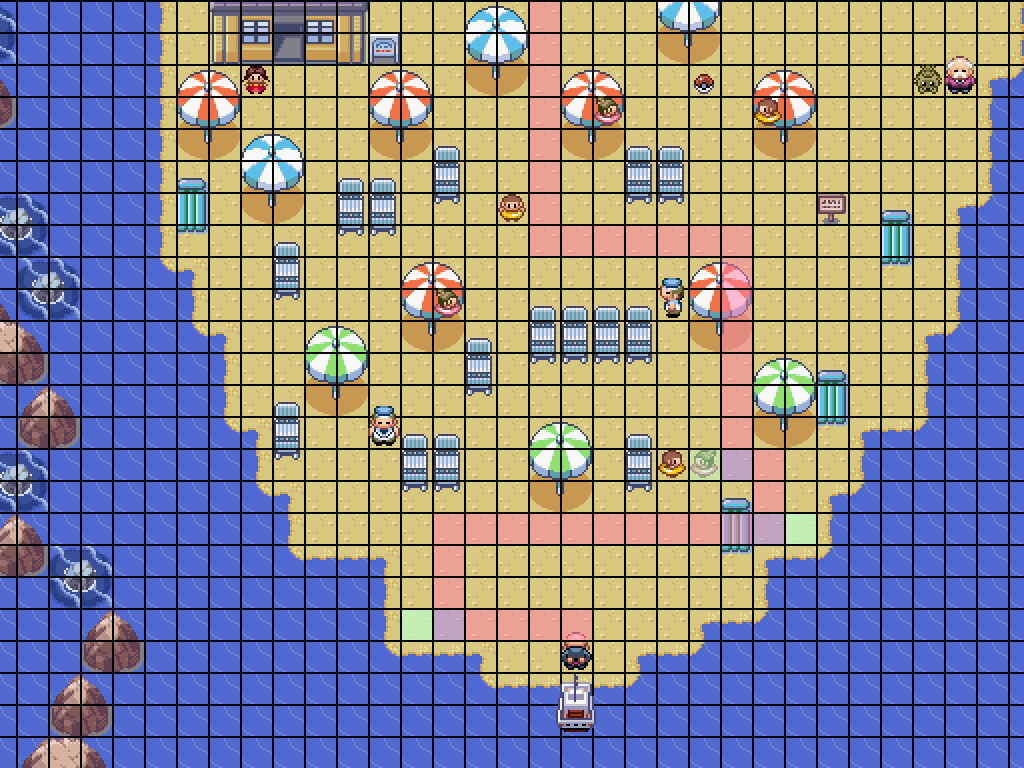

Grid-based

- created by superimposing a grid over a game environment

- traversability flag indicates whether the cell is traversable or not

- connection geometries: tile, octile, hex

- reflecting environmental changes = recalculation of the traversability flag
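A minimal sketch of a grid-based navigation graph, assuming the grid is stored as a list of rows of boolean traversability flags. The names `TILE`, `OCTILE` and `neighbors` are illustrative and do not come from any particular engine.

```python
# Neighbor offsets for two connection geometries (hex is omitted for brevity).
TILE = [(1, 0), (-1, 0), (0, 1), (0, -1)]               # 4-connected
OCTILE = TILE + [(1, 1), (1, -1), (-1, 1), (-1, -1)]    # 8-connected


def neighbors(grid, cell, offsets=TILE):
    """Return the traversable neighbors of cell; grid[r][c] is the flag."""
    rows, cols = len(grid), len(grid[0])
    r, c = cell
    result = []
    for dr, dc in offsets:
        nr, nc = r + dr, c + dc
        if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc]:
            result.append((nr, nc))
    return result


# Hypothetical 3x3 map: True = traversable, False = blocked.
GRID = [
    [True, True, False],
    [True, True, True],
    [False, True, True],
]
```

Reflecting an environmental change then amounts to flipping one flag, for example `GRID[1][2] = False` after a building is placed on that cell.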

Example: Connection geometry

Pokémon series

OpenTTD

Heroes of M&M

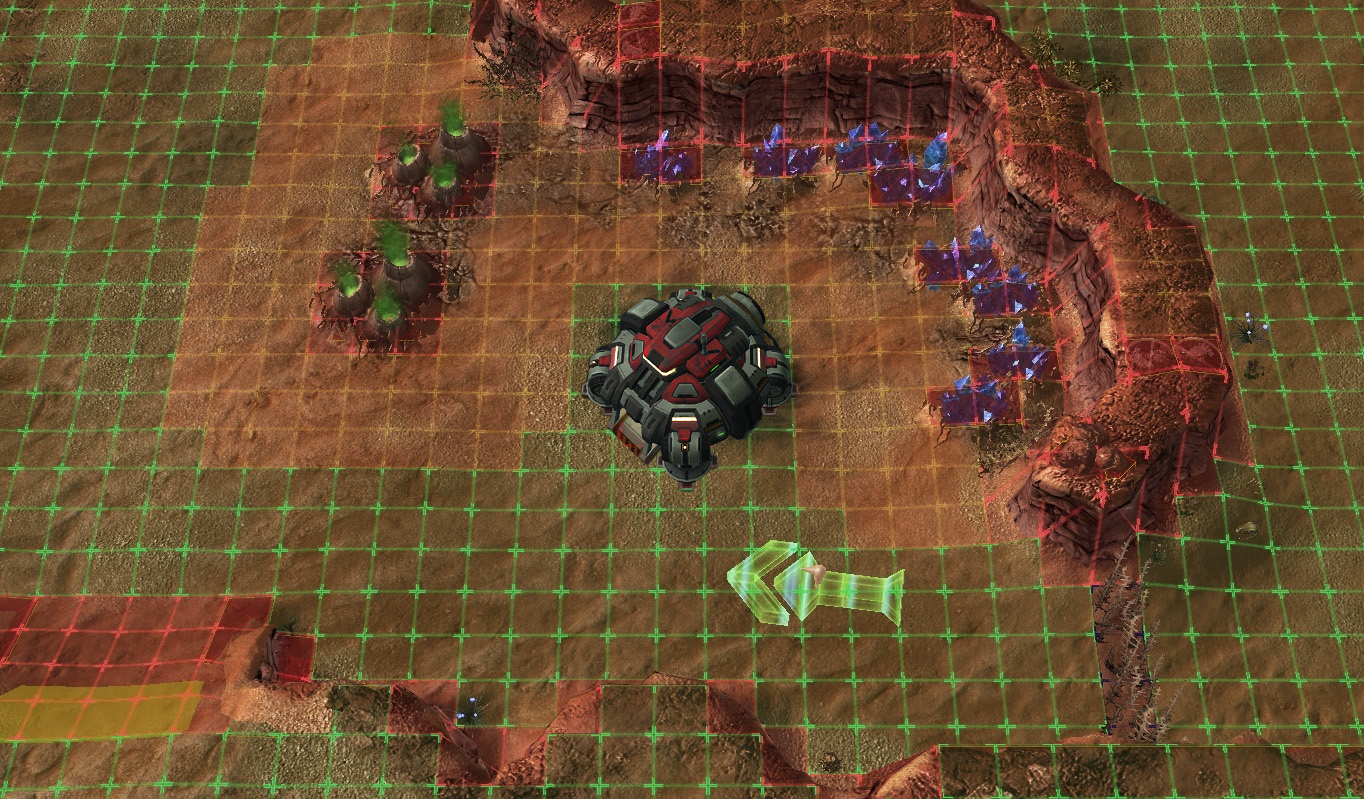

Combined geometry

- units can move in any direction, static objects are located on a grid

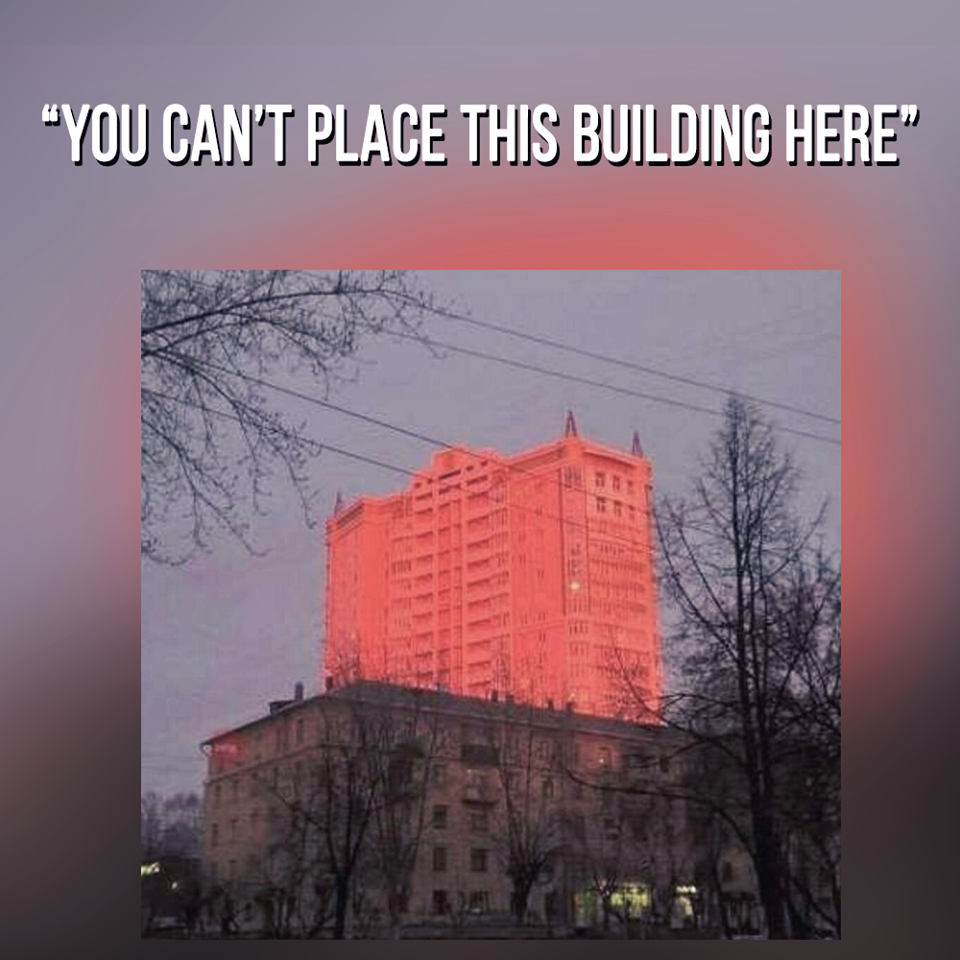

Starcraft II

Pathfinding

Properties

- completeness - whether a solution is found whenever one exists

- optimality - quality of the solution returned

- smoothing - whether the agent could move along the path smoothly

- steering behavior can be used to smooth the path

Environment type

- static - the map never changes during the gameplay

- dynamic - areas previously traversable can be obstructed later

- the more dynamic the environment, the more often paths have to be replanned

NPC movement

- find the closest graph node to the NPC's current location: A

- find the closest graph node to the target location: B

- find path from A to B

- move to A

- move along the path to B

- move from B to target location
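The steps above can be sketched as follows. This is only an illustration: the waypoint positions in `NODES` are made up, and `straight_chain` is a stand-in for a real pathfinder so that the example stays self-contained.

```python
import math

# Hypothetical waypoint graph: node name -> (x, y) position.
NODES = {"a": (0.0, 0.0), "b": (4.0, 0.0), "c": (4.0, 3.0)}


def closest_node(position, nodes=NODES):
    """Return the name of the graph node nearest to a world position."""
    return min(nodes, key=lambda name: math.dist(position, nodes[name]))


def plan_movement(npc_pos, target_pos, find_path):
    """Return every position the NPC walks through, in order."""
    start = closest_node(npc_pos)      # node A
    goal = closest_node(target_pos)    # node B
    node_path = find_path(start, goal)
    # current position -> A -> ... -> B -> target location
    return [npc_pos] + [NODES[n] for n in node_path] + [target_pos]


def straight_chain(start, goal):
    """Stand-in pathfinder (assumption): nodes form a chain a-b-c."""
    names = sorted(NODES)
    i, j = names.index(start), names.index(goal)
    return names[i:j + 1] if i <= j else names[j:i + 1][::-1]
```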

Pathfinding algorithms

Uninformed graph searches

- search a graph without regard to any associated edge cost

- DFS (depth-first search)

- searches by moving as deep into the graph as possible

- is not guaranteed to find the best path

- BFS (breadth-first search)

- fans out from the source node and always finds a path with the fewest edges, which is the best path only when all edges cost the same
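A short breadth-first search sketch, assuming the graph is given as a dictionary of adjacency lists. The graph `GRAPH` is a made-up example; edge costs are ignored entirely, as in any uninformed search.

```python
from collections import deque


def bfs_path(graph, start, goal):
    """Return a path with the fewest edges from start to goal, or None."""
    parents = {start: None}          # also serves as the visited set
    queue = deque([start])
    while queue:
        node = queue.popleft()
        if node == goal:
            # Walk the parent links back to the start.
            path = []
            while node is not None:
                path.append(node)
                node = parents[node]
            return path[::-1]
        for neighbor in graph.get(node, []):
            if neighbor not in parents:
                parents[neighbor] = node
                queue.append(neighbor)
    return None


# Hypothetical directed graph.
GRAPH = {"A": ["B", "C"], "B": ["D"], "C": ["D"], "D": ["E"], "E": []}
```

Replacing the queue with a stack (`pop()` instead of `popleft()`) would turn this into a depth-first search, which may return a longer path.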

Cost-based graph searches

- Dijkstra's Algorithm

- explores every node in the graph and finds the shortest path from the start node to every other node in the graph

- uses CSF (cost-so-far) metric

- explores many unnecessary nodes

- A* (Dijkstra with a Twist)

- extension of Dijkstra, first described in 1968

- main difference: augmentation of the CSF value with a heuristic value

A*

- improved Dijkstra by an estimate of the cost to the target from each node

- Cost $f(n) = g(n) + h(n)$, where $g(n)$ is the cost-so-far and $h(n)$ is the heuristic estimate

- Heuristics: Euclidean, Manhattan, adaptive (penalty for direction change)

- Manhattan distance will work if almost no obstacles appear

- Improvements

- preprocess the map, calculate universal paths

- mark tiles which cannot lead anywhere as dead-ends

- limit the search space
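A compact A* sketch on a 4-connected grid, assuming unit step costs and the Manhattan heuristic. Nodes are expanded in order of cost-so-far plus heuristic estimate. The map encoding (`#` for blocked cells) and all names are assumptions made for this example.

```python
import heapq


def manhattan(a, b):
    """Heuristic estimate: grid distance ignoring obstacles."""
    return abs(a[0] - b[0]) + abs(a[1] - b[1])


def a_star(grid, start, goal):
    """grid: list of strings, '#' = blocked. Returns a list of cells or None."""
    rows, cols = len(grid), len(grid[0])
    cost_so_far = {start: 0}
    parents = {start: None}
    open_heap = [(manhattan(start, goal), start)]   # (g + h, cell)
    closed = set()
    while open_heap:
        _, node = heapq.heappop(open_heap)
        if node in closed:
            continue                                # stale heap entry
        if node == goal:
            path = []
            while node is not None:
                path.append(node)
                node = parents[node]
            return path[::-1]
        closed.add(node)
        r, c = node
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if not (0 <= nr < rows and 0 <= nc < cols) or grid[nr][nc] == "#":
                continue
            new_cost = cost_so_far[node] + 1
            if new_cost < cost_so_far.get(nxt, float("inf")):
                cost_so_far[nxt] = new_cost
                parents[nxt] = node
                heapq.heappush(open_heap, (new_cost + manhattan(nxt, goal), nxt))
    return None


# Hypothetical map with a wall in the middle row.
GRID = [
    "....",
    ".##.",
    "....",
]
```

With the heuristic fixed at zero, the same loop behaves like Dijkstra's algorithm, which is one way to see A* as an extension of it.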

Pathfinding algorithms: comparison

- breadth-first search ignores costs

- Dijkstra ignores position of the target

- A* takes into account both of them

HPA*: Hierarchical pathfinding A*

- uses a fixed-size clustering abstraction (problem subdivision)

- several superimposed navigation graphs of different granularities

- divides the navgraphs into regions

- gate - longest obstacle-free segment along a border

- transitions across clusters are connected by inter-edges, nodes within a cluster by intra-edges

- fast enough for most games

- 3 stages: build an abstract graph, search the abstract graph for an abstract path, refine the abstract path into a low-level path

Example: OpenTTD

- Business simulator, derived from Transport Tycoon Deluxe

- many challenges to AI

- management over particular transport types

- financial issues handling (loan control)

- maintenance and vehicle replacement

- acceleration model

- upgrades, road extending

- pathfinding, partial reusability of existing roads

- vehicle utilization, failures, traffic

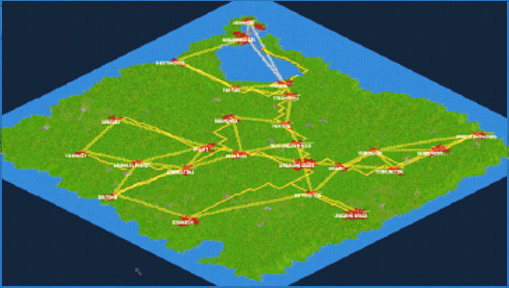

OpenTTD AI

PathZilla AI

- advanced graph theory

- Delaunay Triangulation

- roads based on minimum spanning tree

- queue of planned actions

trAIns

- most scientific approach, evolved from a research project in 2008

- double railways support

- concentration of production

AdmiralAI

- attempt to implement as many features from game API as possible

- the oldest and most comprehensive AI for OpenTTD

NoCAB

- very fast network development and company value growth

- custom A* implementation designed for speed

- can upgrade vehicles

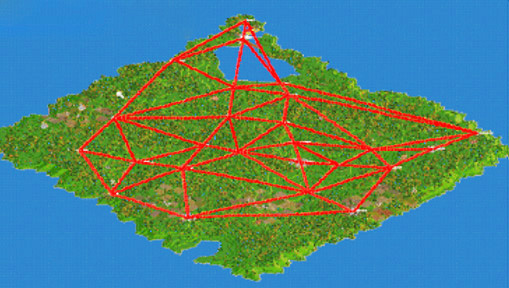

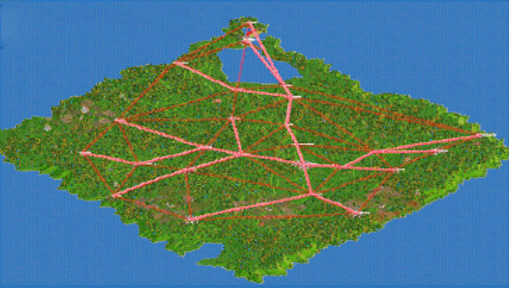

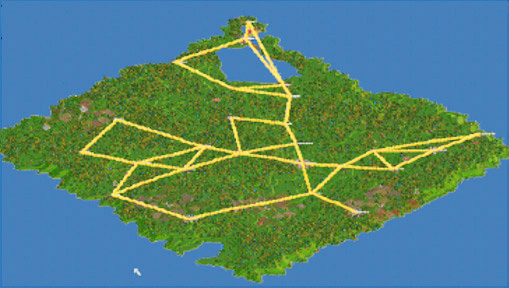

Example: PathZilla road analysis

Triangulation

Shortest path tree

Min spanning tree

Planning graph

Final road network

Basic AI techniques

Scripting

- IF-THIS-THEN-THAT approach

- AI behavior is completely hardcoded

- simple, easy to debug, easy to extend if programmed properly

- works well only as long as the human player behaves as the developers expect

- good scripted behavior must cover a large number of situations

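    // Doom 2: find player to chase (simplified from A_Look in p_enemy.c)
    void A_Look (mobj_t* actor) {
        mobj_t* targ;

        actor->threshold = 0; // any shot will wake up
        targ = actor->subsector->sector->soundtarget; // last noise source in this sector

        // a shootable noise source becomes the new target
        if (targ && (targ->flags & MF_SHOOTABLE)) {
            actor->target = targ;
            if (actor->flags & MF_AMBUSH) {
                // ambushers react only if they can actually see the target
                if (P_CheckSight (actor, actor->target))
                    goto seeyou;
            } else goto seeyou;
        }

        // nothing heard: look for a visible player instead
        if (!P_LookForPlayers (actor, false)) return;

        // go into chase state
    seeyou:
        P_SetMobjState (actor, actor->info->seestate);
    }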

Finite state machine

- the oldest and most commonly used formalism to model game AIs

- useful for an entity whose behavior changes based on an internal state that can be divided into a small number of distinct options

- each game entity can be in exactly one of a finite number of states at any time

- Definition

- quadruple $(Q, \Sigma, \delta, q_0)$

- $Q$ is a finite, non-empty set of states

- $\Sigma$ is a finite set of inputs

- $\delta: Q \times \Sigma \to Q$ is the state-transition function

- $q_0$ is an initial state, $q_0 \in Q$

- can be implemented via polymorphism or a state transition table

- unmanageable for large complex systems, leading to transition explosion

Example: Pacman FSM

Example: Pacman transition table

| State | Next state | Condition |
|---|---|---|
| Wander the maze | Chase Pacman | Spot Pacman |
| Wander the maze | Flee Pacman | PowerPellet eaten |
| Chase Pacman | Wander the maze | Lose Pacman |
| Chase Pacman | Flee Pacman | PowerPellet eaten |
| Flee Pacman | Return to Base | Eaten by Pacman |
| Flee Pacman | Wander the maze | PowerPellet expires |
| Return to Base | Wander the maze | Reach Central base |
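The transition table maps directly onto a dictionary keyed by state and input. The sketch below is one possible encoding; the state and event identifiers are invented for the example, and events that have no row in the table are assumed to leave the state unchanged.

```python
# (current state, event) -> next state, one entry per table row.
TRANSITIONS = {
    ("wander", "spot_pacman"): "chase",
    ("wander", "power_pellet_eaten"): "flee",
    ("chase", "lose_pacman"): "wander",
    ("chase", "power_pellet_eaten"): "flee",
    ("flee", "eaten_by_pacman"): "return_to_base",
    ("flee", "power_pellet_expires"): "wander",
    ("return_to_base", "reach_base"): "wander",
}


class GhostFSM:
    """A ghost that is always in exactly one state."""

    def __init__(self, initial="wander"):
        self.state = initial

    def handle(self, event):
        """Apply the transition function; unknown pairs keep the state."""
        self.state = TRANSITIONS.get((self.state, event), self.state)
        return self.state
```

The alternative mentioned above, polymorphism, would replace the dictionary with one class per state, each deciding its own successor.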

Example: Warcraft Doomguard

- let's add an ability to fall asleep

Hierarchical state machine

- also known as statecharts

- each state can have a superstate or a substate

- groups of states share transitions

- usually implemented as a stack

- push a low-level state onto the stack when it is entered

- pop and move to the next state when finished
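A minimal sketch of the stack-based variant, assuming states are plain strings and that the bottom state is never removed. `StateStack` is an illustrative name, not a standard class.

```python
class StateStack:
    """Stack-based state machine: the top of the stack is the active state."""

    def __init__(self, base_state):
        self._stack = [base_state]

    @property
    def current(self):
        return self._stack[-1]

    def push(self, state):
        """Enter a lower-level state; the previous one is kept underneath."""
        self._stack.append(state)

    def pop(self):
        """Finish the active state and resume the one below it."""
        if len(self._stack) > 1:     # the base state always stays
            return self._stack.pop()
        return None
```

For example, a unit on `"patrol"` can push `"attack"` and then `"reload"`; popping twice brings it back to patrolling without any explicit transitions for the way back.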

Behavior tree

- tree of hierarchical nodes that control decision making process

- originated in the game industry around 2004 (Halo 2)

- combine elements from both Scripting and HFSMs

- there is no standardized formalization

- inner nodes lead to appropriate leaves best suited to the situation

- depth-first traversal, starting with the root node

- each executed behavior passes back and returns a status

- SUCCESS, FAILURE, RUNNING, (SUSPENDED)

Behavior tree

| Node Type | Success | Failure | Running |
|---|---|---|---|
| Selector | If one child succeeds | If all children fail | If one child is running |
| Sequence | If all children succeed | If one child fails | If one child is running |
| Decorator | It depends... | It depends... | It depends... |
| Parallel (M children) | If at least N children succeed | If more than M-N children fail | Otherwise |
| Action | When completed | Upon an error | During completion |
| Condition | If true | If false | Never |

Behavior tree node types

Selector

- tries to execute its child nodes from left to right until it receives a successful response

- when a successful response is received, responds with a success

Sequence

- will execute each of its child nodes in a sequence from left to right until a failure is received

- if every child node responds with a success, the sequence node itself will respond with a success

Decorator

- allows for additional behavior to be added without modifying code

- only one child node

- returns success if the specified condition is met and its subtree has been executed successfully

- Example: Repeater, Inverter, Succeeder, Failer, UntilFail, UntilSucceed

Parallel node

- a node that concurrently executes all of its children

Condition

- a node that will observe the state of the game environment and respond with either a success or a failure based on the observation, condition etc.

- Instant Check condition - the check is run once

- Monitoring condition - keeps checking a condition over time

Action

- a node used to interact with the game environment

- may represent an atomic action, a script or even another BT

Blackboard

- a construct that stores whatever information is written to it

- any node inside or outside the tree has access to the blackboard

- similar to GameModel attribute of component architecture's root node
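The node types described above can be sketched in a few lines. This is a simplified illustration, not a reference design: the blackboard is assumed to be a plain dictionary, every tick restarts from the first child, and the door-opening tree and its keys are made up.

```python
SUCCESS, FAILURE, RUNNING = "SUCCESS", "FAILURE", "RUNNING"


class Condition:
    def __init__(self, predicate):
        self.predicate = predicate

    def tick(self, blackboard):
        return SUCCESS if self.predicate(blackboard) else FAILURE


class Action:
    def __init__(self, func):
        self.func = func

    def tick(self, blackboard):
        return self.func(blackboard)       # func returns a status


class Selector:
    """Runs children left to right until one does not fail."""

    def __init__(self, *children):
        self.children = children

    def tick(self, blackboard):
        for child in self.children:
            status = child.tick(blackboard)
            if status != FAILURE:
                return status
        return FAILURE


class Sequence:
    """Runs children left to right until one does not succeed."""

    def __init__(self, *children):
        self.children = children

    def tick(self, blackboard):
        for child in self.children:
            status = child.tick(blackboard)
            if status != SUCCESS:
                return status
        return SUCCESS


class Inverter:
    """Decorator with one child: swaps SUCCESS and FAILURE."""

    def __init__(self, child):
        self.child = child

    def tick(self, blackboard):
        status = self.child.tick(blackboard)
        if status == SUCCESS:
            return FAILURE
        if status == FAILURE:
            return SUCCESS
        return status


def open_door(bb):
    bb["door_open"] = True
    return SUCCESS


def walk_through(bb):
    bb["inside"] = True
    return SUCCESS


# Hypothetical tree: get the door open (if possible), then walk in.
enter_room = Sequence(
    Selector(
        Condition(lambda bb: bb["door_open"]),
        Sequence(Inverter(Condition(lambda bb: bb["door_locked"])),
                 Action(open_door)),
    ),
    Action(walk_through),
)
```

Calling `enter_room.tick(blackboard)` once per frame drives the behavior; a production tree would also remember which child returned `RUNNING`.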

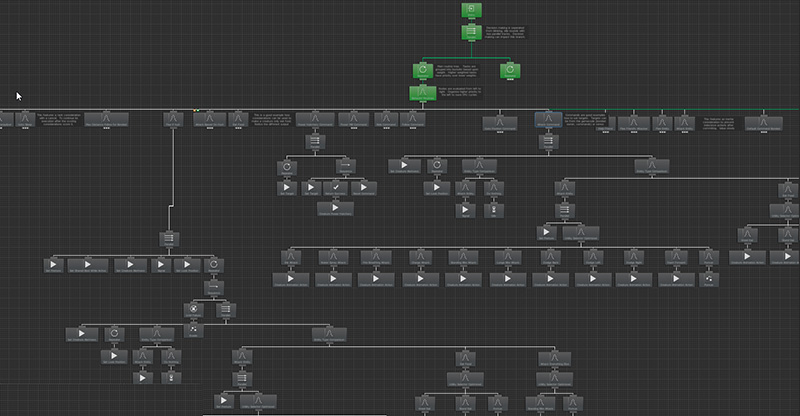

Example: Unreal Engine BT Editor

Example: Warcraft Doomguard

BT improvements

- let's define a conditional selector in order to simplify the diagram

Example: Door opening

Advanced AI techniques

Terms

Bot

- an intelligent artificial player emulating a human player

- used mainly to describe AI in FPS games

NPC

- non-playable character - doesn't play the game but rather interacts with the player

Planning

- a formalized process of searching for a sequence of actions to satisfy a goal

Supervised learning

- works just like learning at schools

- for certain input there is a correct output the algorithm has to learn

Unsupervised learning

- the output cannot be categorized as being either correct or incorrect

- the algorithm learns patterns instead

Reinforcement learning

- an agent must learn the best possible output by using trial and error

- for each action chosen the agent receives feedback

Challenges for Game AI

Game AI features and limits

- real-time

- limited resources

- incomplete knowledge

- planning

- learning

Game AI properties

- predictability and unpredictability (surprise elements)

- support - communication between NPC and a player

- surprise - harassment, ambush, team support

- winning well and losing well

- cheating - acceptable as long as it doesn't get detected by the player

We want it to be fun to play against, not beat us easily

Named AI techniques in games

- Starcraft (1998) - particle model for hidden units' position estimation

- Unreal Tournament (1999) - Bayesian Behaviors

- Quake III (1999) - HFSM for decisions, AAS (Area Awareness System) for collisions and pathfinding

- Halo 2 (2004) - Behavior Trees and Fuzzy Logic

- F.E.A.R. (2005) - GOAP (goal-oriented action planning)

- Arma (2006) - Hierarchical Task Networks (HTN) for mission generator

- Wargus (Warcraft II Clone) - Case-Based reasoning

- City Conquest (2011) - genetic algorithm used to tune dominant strategies

- Tomb Raider (2013) - advanced GOAP

AI Model

- AI model is just another representation of the game world

- must be simple enough for the AI to process it in real-time and still complex enough to produce a believable behavior

- Mario could be controlled either by an evolutionary algorithm or just a simple script with pathfinding, enemy detection and the ability to jump over enemies

View Model

Physics Model

AI Model

Predicting opponents

Cheating bots are not fun in general

- inhumanly accurate aim in shooting games, bots that know where you are,...

Partial observations of the game state

- AI is informed in the same way as the human player

- the mistakes these models make are more human-like

- filtering problem - estimating the internal states based on partial observations

- general approaches:

- Hidden semi-Markov model

- Particle filters

- approaches for FPS:

- Occupancy map

- SOAR cognitive architecture (used in Quake II)

- Vision Cone (used in Bioshock)

- approaches for RTS:

- Threat map

- Influence map

- Bayesian networks

Occupancy map

- a grid over the game environment

- maintains probability for each grid cell

- probability represents the likelihood of an opponent presence

- when the opponent is not visible, the probabilities from the previous occupancy cells are propagated along the edges to neighboring cells
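One propagation step might look like the sketch below. The diffusion rule is an assumption chosen for simplicity: each cell keeps a share of its probability and spreads the rest equally over its walkable 4-neighbors; the `spread` parameter is hypothetical.

```python
def propagate(grid, walkable, spread=0.5):
    """Return a new probability grid after one diffusion step."""
    rows, cols = len(grid), len(grid[0])
    new = [[0.0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            p = grid[r][c]
            if p == 0.0:
                continue
            around = [
                (nr, nc)
                for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1))
                if 0 <= nr < rows and 0 <= nc < cols and walkable[nr][nc]
            ]
            if not around:
                new[r][c] += p            # nowhere to go, keep everything
                continue
            new[r][c] += p * (1 - spread)
            for nr, nc in around:
                new[nr][nc] += p * spread / len(around)
    return new


# Opponent last seen in the middle of an open 3x3 room.
WALKABLE = [[True] * 3 for _ in range(3)]
LAST_SEEN = [[0.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 0.0]]
```

The total probability stays constant, so repeated steps model growing uncertainty about where the unseen opponent has moved.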

Threat map

- used in RTS

- the value at any coordinate is the damage that the enemy can inflict

- updating is done through iterating the list of known enemies

- can be easily integrated into pathfinding

- Example: combat units bypass enemy defensive structure in order to attack their infrastructure
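A rough sketch of how such a map could be built and fed into pathfinding, assuming each known enemy is described by a position, an attack range and a damage value. The tuple layout, `step_cost` and its weights are illustrative assumptions.

```python
import math


def build_threat_map(width, height, enemies):
    """enemies: list of (x, y, attack_range, damage). Returns threat[y][x]."""
    threat = [[0.0] * width for _ in range(height)]
    for ex, ey, attack_range, damage in enemies:   # iterate known enemies
        for y in range(height):
            for x in range(width):
                if math.hypot(x - ex, y - ey) <= attack_range:
                    threat[y][x] += damage
    return threat


def step_cost(threat, x, y, base_cost=1.0, threat_weight=0.1):
    """Cost of entering a cell: movement cost plus weighted threat."""
    return base_cost + threat_weight * threat[y][x]


# Two hypothetical defensive structures next to each other.
THREAT = build_threat_map(5, 5, [(2, 2, 1, 10.0), (3, 2, 1, 5.0)])
```

Using `step_cost` as the edge cost of a cost-based search makes units route around defended cells whenever a safer detour is cheap enough.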

Influence map

- more generalized than threat maps

- helps the AI to make better decisions by providing useful information

- situation summary (borders, enemy presence)

- statistics summary (number of visits, number of assaults)

- future predictions

- Example: number of units killed during last strike

Reinforcement learning

- learning what to do and how to map states to actions to maximize a reward

- based on its action, the agent gets a reward or punishment - these rewards are used to learn

- Reward - informs the agent to which outcome the selected action leads

Algorithms

- Genetic Evolution

- Q-Learning

- SARSA (State-Action-Reward-State-Action)

- Simulated annealing

- Monte-Carlo simulation

Models

- Decision trees

- Bayesian networks

- Neural networks
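To make the reward-driven loop concrete, here is a small tabular Q-learning sketch. The environment is a made-up corridor in which only reaching the last cell is rewarded; the learning rate, discount and exploration rate are arbitrary assumptions.

```python
import random


def train_q(n_states=5, episodes=500, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    """Learn Q-values for a corridor; action 0 = left, action 1 = right."""
    rng = random.Random(seed)
    moves = (-1, +1)
    q = [[0.0, 0.0] for _ in range(n_states)]
    for _ in range(episodes):
        state = 0
        while state != n_states - 1:
            # Epsilon-greedy: explore sometimes, otherwise take the best action.
            if rng.random() < epsilon:
                action = rng.randrange(2)
            else:
                action = 0 if q[state][0] > q[state][1] else 1
            next_state = min(max(state + moves[action], 0), n_states - 1)
            reward = 1.0 if next_state == n_states - 1 else 0.0
            # Q-learning update: move towards reward + discounted best future value.
            target = reward + gamma * max(q[next_state])
            q[state][action] += alpha * (target - q[state][action])
            state = next_state
    return q
```

After training, the greedy policy (pick the action with the larger Q-value in each state) walks straight to the rewarded cell.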

Artificial neural network

- computational model that attempts to simulate a biological neural network

- a network of nodes connected by links that have numeric weights attached

- backpropagation - training algorithm that propagates the error (a measure of divergence between the observed and the desired output) backwards through the network to adjust the weights

Neural network applications

MarI/O

- NEAT - genetic algorithm for evolving neural networks

- Input: simplified game screen (13x13 blocks)

- Output: pressed button (6 neurons)

- Max neurons: 1 000 000

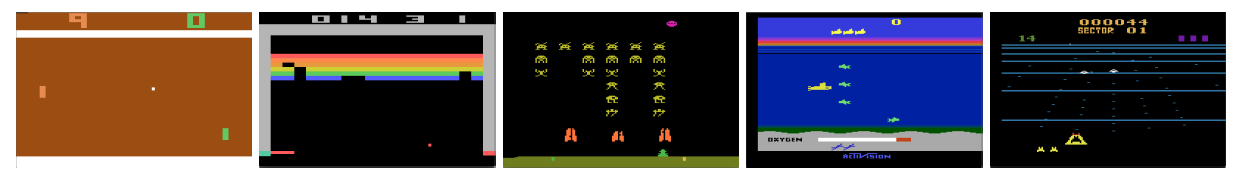

DeepMind 2014

- neural network that can play Atari games (uses Q-learning)

- Inputs: 210x160 RGB at 60Hz, terminal signal and game score

Goal-oriented action planning

- centers on the idea of goals as desirable world states

- each action has a set of conditions it can satisfy (its effects), as well as a set of preconditions that must be true before the action can be executed

- originally implemented for F.E.A.R. (2005)

- two stages: select a relevant goal and attempt to fulfill that goal
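The planning stage can be illustrated with a tiny forward search over world states. This is a sketch only: it uses breadth-first search rather than whatever search a shipped game uses, and the actions and facts in `ACTIONS` are invented for the example.

```python
from collections import deque

# Hypothetical actions: name -> (preconditions, effects), both dicts of facts.
ACTIONS = {
    "draw_weapon": ({}, {"armed": True}),
    "load_weapon": ({"armed": True}, {"loaded": True}),
    "attack": ({"armed": True, "loaded": True}, {"target_dead": True}),
}


def satisfies(state, conditions):
    """True if every required fact holds; missing facts count as False."""
    return all(state.get(key, False) == value for key, value in conditions.items())


def plan(state, goal, actions=ACTIONS):
    """Return the shortest list of action names reaching the goal, or None."""
    start = frozenset(state.items())
    queue = deque([(start, [])])
    seen = {start}
    while queue:
        current, steps = queue.popleft()
        world = dict(current)
        if satisfies(world, goal):
            return steps
        for name, (preconditions, effects) in actions.items():
            if satisfies(world, preconditions):
                nxt = frozenset({**world, **effects}.items())
                if nxt not in seen:
                    seen.add(nxt)
                    queue.append((nxt, steps + [name]))
    return None
```

The dependencies between actions are never written down explicitly; they emerge from matching effects against preconditions while searching.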

Example: F.E.A.R.

- Advanced AI behavior: blind fire, dive through windows, take cover,...

- the key idea: to determine when to switch and what parameters to set

- a set of goals is assigned to each NPC

- these goals compete for activation, and the AI uses the planner to try to satisfy the highest priority goal

- the AI figures out the dependencies at run-time based on the goal state and the preconditions and effects of actions

| Soldier | Assassin | Rat |
|---|---|---|
| Attack | Attack | Animate |
| AttackCrouch | InspectDisturbance | Idle |
| SuppressionFire | LookAtDisturbance | GotoNode |
| SuppressionFireFromCover | SurveyArea | UseSmartObjectNode |
| FlushOutWithGrenade | AttackMeleeUncloaked | |
| AttackFromCover | TraverseBlockedDoor | |
| BlindFireFromCover | AttackFromAmbush | |
| ReloadCovered | AttackLungeUncloaked | |

AI in Real-time strategies

Real-time strategy

- Real-time strategy is a Bayesian, zero-sum game (Rubinstein, 1994)

- A game where the player is in control of certain, usually military, assets, which the player can manipulate in order to achieve victory

Main elements

- map, mini-map

- resources

- units and their attributes

- buildings

- tech tree

Other features

- real-time aspect (no turns)

- fog of war

- many strategies

- game modes: campaign, skirmish, multiplayer

RTS games

RTS features

Resource control

- minerals, gas, oil, trees etc.

- controlling more resources increases the players' construction capabilities

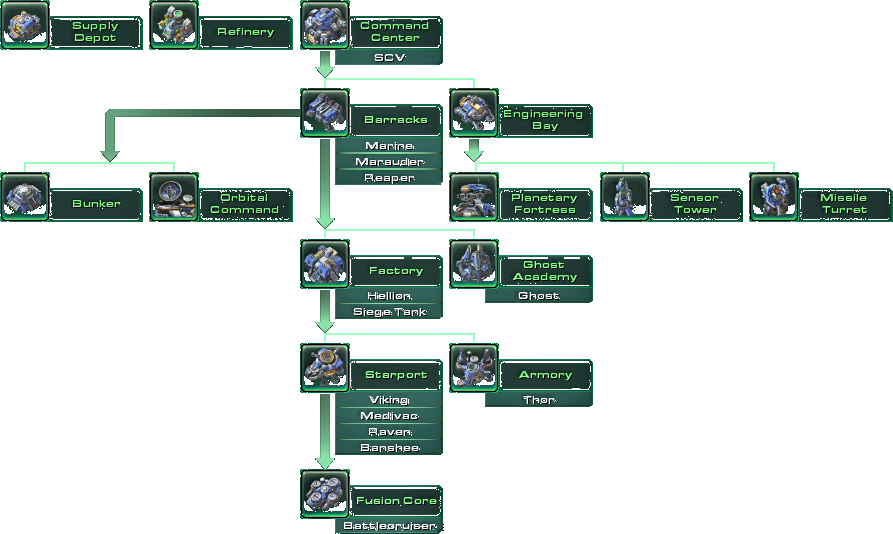

Tech tree

- a directed acyclic graph that contains the whole technological development of a faction

Build order (opening)

- the timings at which the first buildings are constructed

Fog of war

- fog that covers the parts of the map the player has not yet explored

- requires scouting unexplored areas to find enemy sources

Micromanagement

- way of controlling units in detail while they are in combat

Tactics

- a set of specific actions used when applying a strategy

Strategy

- making decisions knowing what we saw from the opponent

- we can prioritize economy over technology or military production

Example: Starcraft 2 tech-tree

RTS strategies

Strategies

- rush strategy

- hurrying into one or more early attacks

- countering strategy

- surviving an attack and performing a counter-attack

- siege

- playing defensively, utilizing heavy weaponry and performing a set of massive attacks

- tech strategy

- spending resources in early game on enabling the player to construct more advanced combat units

Turtling

- player that gets into a defensive position but is unable to expand

Scouting

- sending units to places where the player suspects there might be enemies, and possibly discovering their location and progress

RTS AI layers

- Three layers (architectural perspective)

- micromanagement

- tactics (army positions)

- strategy (tech tree)

AI in real-time strategies

- a goal in the development of an AI is to make the human player identify the computer opponent as an opposing player and not just a set of algorithms

- in early games, AI attacked at intervals in small waves of units, emulating a sense of action

- approaches: manager-based AI, layer-based AI, CBR, rule-based AI, reinforcement learning

Manager-based AI

Unit micromanagement AI

- controls units that are in combat

Medium-level strategic AI

- in control of 5-30 units

High-level strategic AI

- overall strategy, determines a specific playing style

Worker unit AI

- controls workers and their goals

Town building AI

- builds a town/base in response to the tech tree

Opponent modelling AI

- process of keeping track of what units the enemy builds

Resource management AI

- manages gathering resources

Reconnaissance AI

- finding out what is happening on the map

Layer-based AI

- AI is built from multiple layers

- each layer handles a specific task

- there can be lower-level and higher-level decision components

- decision components consider available information, retrieved by lower components, to make decisions; executors are triggered afterwards

- Sensor - reads the game state

- Analyzer - combines sensing data to form a coherent picture

- Memorizer - stores various types of data (terrain analysis, past decisions,...)

- Decider - a strategic decider, determines goals

- Executor - translates goals into actions

- Coordinator - synchronizes groups of units

- Actuator - executes actions by modifying the game state

Example: Layer-based AI

Case-based reasoning

Main idea

- when making decisions, we remember previous experiences and then reapply solutions that worked in previous situations

- if a certain situation hasn't been encountered yet, we recall similar experience and then adjust the solution to fit the current situation

- takes the current situation, compares it to a knowledge base of previous cases, selects the most similar one, adjusts it and updates the base

Steps

- Retrieve - get the cases which are relevant to the given problem

- Reuse - create a preliminary solution from selected cases

- Revise - adapt the preliminary solution to fit the current situation

- Retain - save the case into the knowledge base
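The four steps can be sketched with a nearest-neighbor case base. Everything concrete here is an assumption for illustration: situations are numeric tuples (enemy units, own units), a solution is the number of defenders to build, and `scale_defenders` is a made-up revision rule.

```python
import math


class CaseBase:
    def __init__(self):
        self.cases = []      # list of (situation, solution) pairs

    def retrieve(self, situation):
        """Retrieve: the stored case most similar to the situation."""
        return min(self.cases, key=lambda case: math.dist(case[0], situation))

    def solve(self, situation, revise):
        past_situation, past_solution = self.retrieve(situation)
        # Reuse the old solution as a draft, then revise it for the new situation.
        solution = revise(past_solution, past_situation, situation)
        self.cases.append((situation, solution))     # Retain
        return solution


def scale_defenders(past_solution, past_situation, situation):
    """Hypothetical revision: one extra defender per extra enemy unit."""
    return past_solution + (situation[0] - past_situation[0])


BASE = CaseBase()
BASE.cases.append(((10, 5), 8))    # 10 enemies, 5 own units -> built 8 defenders
BASE.cases.append(((2, 5), 1))     # 2 enemies, 5 own units -> built 1 defender
```

A real system would also record how well each solution worked, so that poor cases can be revised or discarded before they are reused.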

Example: Defcon

- multiplayer real-time strategy game

- players compete in a nuclear war to score points by hitting opponent cities

- stages: placing resources, fleet maneuvers, fighter attacks, final missile strikes

- AI: decision tree learning and case-based reasoning

Defcon: Case structure

- starting positions of units and buildings

- metafleet movement and attack plan

- performance statistics for deployed resources

- abstraction of the opponent attacks (time-frame clustering)

- routes taken by opponent fleets

- final scores of the two players

Example: Megaglest

- open-source 3D RTS

- seven factions: tech, magic, egypt, indians, norsemen, persian, romans

- rule-based AI

Megaglest rules

| Rule | Condition | Command | Interval [ms] |
|---|---|---|---|
| AiRuleWorkerHarvest | Worker stopped | Order worker to harvest | 2 000 |
| AiRuleRefreshHarvester | Worker reassigned | | 20 000 |
| AiRuleScoutPatrol | Base is stable | Send scout patrol | 10 000 |
| AiRuleRepair | Building damaged | Repair | 10 000 |
| AiRuleReturnBase | Stopped unit | Order return base | 5 000 |
| AiRuleMassiveAttack | Enough soldiers | Order massive attack | 1 000 |
| AiRuleAddTasks | Tasks empty | Add tasks | 5 000 |
| AiRuleBuildOneFarm | Not enough farms | Build farm | 10 000 |
| AiRuleResourceProducer | Not enough resources | Build resource producer | 5 000 |
| AiRuleProduce | Performing prod. task | | 2 000 |
| AiRuleBuild | Performing build task | | 2 000 |
| AiRuleUpgrade | Performing upg. task | | 2 000 |
| AiRuleExpand | Expanding | | 30 000 |
| AiRuleUnBlock | Blocked units | Move surrounding units | 3 000 |
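A rule table like this one can be driven by a simple timer loop. The sketch below is not MegaGlest's implementation; it only assumes that each rule has a condition, a command and a check interval in milliseconds, and the world dictionary with its keys is invented.

```python
class Rule:
    def __init__(self, name, condition, command, interval_ms):
        self.name = name
        self.condition = condition      # callable(world) -> bool
        self.command = command          # callable(world) -> None
        self.interval_ms = interval_ms
        self.elapsed_ms = 0


def update(rules, world, dt_ms):
    """Advance timers; run every due rule whose condition holds."""
    fired = []
    for rule in rules:
        rule.elapsed_ms += dt_ms
        if rule.elapsed_ms >= rule.interval_ms:
            rule.elapsed_ms = 0
            if rule.condition(world):
                rule.command(world)
                fired.append(rule.name)
    return fired


def harvest(world):
    world["harvesting"] += world["idle_workers"]
    world["idle_workers"] = 0


def repair(world):
    world["damaged_buildings"] = 0


# Two rules modeled loosely on rows of the table.
RULES = [
    Rule("AiRuleWorkerHarvest", lambda w: w["idle_workers"] > 0, harvest, 2000),
    Rule("AiRuleRepair", lambda w: w["damaged_buildings"] > 0, repair, 10000),
]
```

Checking cheap rules often and expensive ones rarely keeps the per-frame cost of the AI low.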

Lecture 9 summary

- Navigation graph: abstraction of all locations in a game environment

- waypoint-based, mesh-based, grid-based (tile, octile, hex)

- A*: path-finding algorithm that uses an estimate of the cost to reach the target

- FSM: structure used to model a simple behavior

- each game entity can be in exactly one of a finite number of states at any time

- Behavior Tree: tree of nodes that control a decision making process

- nodes: selector, sequence, parallel, decorator, action, condition

- Terms: Bot, NPC, Planning, Supervised learning, Unsupervised learning, Reinforcement learning

- GOAP: centers on the idea of goals as desirable world states

- RTS AI Layers: micromanagement, tactics, strategy

- Case-based reasoning: finding solutions based on similar past solutions

Goodbye quote

"Taking fire, need assistance!" (Counter-Strike)